Auto-Scale a Cluster

An easy-to-follow guide to auto-scaling a cluster on RONIN.

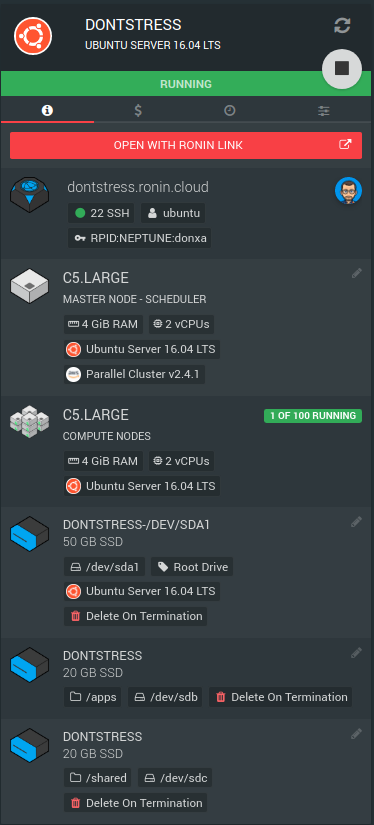

Create a cluster. In this example we will use the following configuration:

- Ubuntu 18.04

- Slurm scheduler

- c5.large instance types

- 1 compute node minimum and 100 compute nodes maximum

Once your machine is up and running, open it with RONIN LINK and launch a terminal, or SSH into the machine.

Now that we are on our cluster, take a breath: you just launched an auto-scaling cluster in about 8 minutes. It's all yours, and you will be the only one in the queue... ah, nice. Surely that's worth a tweet :)

OK, let's jump into our shared apps directory. It is shared with every compute node that the auto-scaling launches.

cd /apps/

Spack is installed on all our clusters and provides loads of applications. For more info, see the Spack website.

Now install the stress application via Spack. Spack will install it, along with all its dependencies, in the shared "/apps" folder... how awesome is that?

spack install stress

Create our "stress" script. This will be the "job" we run on the cluster.

vim stress.sh

Paste the following into the file and save it:

#!/bin/bash

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=1

spack load stress

stress --cpu 2 --timeout 300s --verbose

This script will stress both CPUs of a c5.large for 5 minutes (300 seconds).
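One thing that can trip up this script: `spack load` relies on Spack's shell integration, which a non-interactive batch job may not have set up. The sketch below is a hypothetical helper, `make_stress_job`, that prints a variant of the job script which sources `$SPACK_ROOT/share/spack/setup-env.sh` before `spack load`, and uses the `--cpus-per-task` option to ask Slurm for as many CPUs as stress starts workers. It assumes `SPACK_ROOT` is set on the cluster and that Slurm sees 2 CPUs per c5.large node; neither is confirmed by this guide.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a Slurm batch script for the stress test.
# Assumptions (not from the guide): SPACK_ROOT points at the Spack install,
# and each compute node exposes 2 CPUs to Slurm.
make_stress_job() {
  local cpus="${1:-2}"       # stress workers, also requested from Slurm
  local timeout="${2:-300}"  # run time in seconds
  # Unquoted EOF: ${cpus} and ${timeout} expand now; \${SPACK_ROOT} stays
  # literal so it is resolved when the job runs on the compute node.
  cat <<EOF
#!/bin/bash
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=1
#SBATCH --cpus-per-task=${cpus}

# Load Spack's shell integration so "spack load" works in a batch job.
. "\${SPACK_ROOT}/share/spack/setup-env.sh"
spack load stress

stress --cpu ${cpus} --timeout ${timeout}s --verbose
EOF
}

# Usage: write the job script and make it executable.
# make_stress_job 2 300 > stress.sh && chmod +x stress.sh
```

Requesting the CPUs explicitly means Slurm accounts for both stress workers, rather than reserving the default single CPU per task.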

Make the stress script executable:

chmod +x stress.sh

Run our script on Slurm:

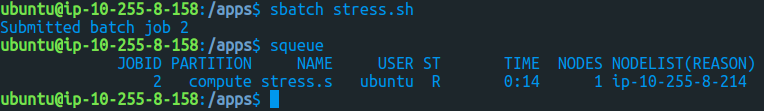

sbatch stress.sh

Now give it a second or so and check the queue:

squeue

You should see something like this:

Run these commands a few times to load up the scheduler with job arrays:

sbatch --array=1-25 stress.sh

sbatch --array=1-20 stress.sh

Sit back and don't stress.
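Because the same script is submitted with more than one range, a small loop saves retyping. A minimal sketch; `submit_arrays` is a hypothetical helper name, while `--array=<range>` and `--parsable` (which makes `sbatch` print just the job ID) are standard `sbatch` options.

```bash
#!/usr/bin/env bash
# Hypothetical helper: submit one job array per range argument,
# e.g. submit_arrays stress.sh 1-25 1-20
submit_arrays() {
  local script="$1"; shift
  local range
  for range in "$@"; do
    # --parsable prints only the job ID of each submitted array.
    sbatch --parsable --array="$range" "$script"
  done
}

# Usage (on the cluster):
# submit_arrays stress.sh 1-25 1-20
```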

You can check the Slurm scheduler by running this command:

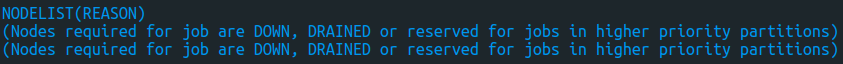

squeue

We can now see there is not enough compute to run these jobs.
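To put a number on "not enough compute", you can count jobs per state. A minimal sketch with a hypothetical `summarize_states` helper: it reads one state per line, such as the output of `squeue -h -r -o "%T"`, where `-h` drops the header, `-r` lists every array task on its own line instead of collapsing pending tasks into one, and `%T` prints only the job state.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn one-state-per-line input into "STATE: count" lines.
summarize_states() {
  # uniq -c prefixes each distinct state with its count; awk reorders the fields.
  sort | uniq -c | awk '{ print $2 ": " $1 }'
}

# Usage on the cluster:
# squeue -h -r -o "%T" | summarize_states
```

While the new nodes are still starting, most lines should report PENDING; the RUNNING count should climb as the cluster grows.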

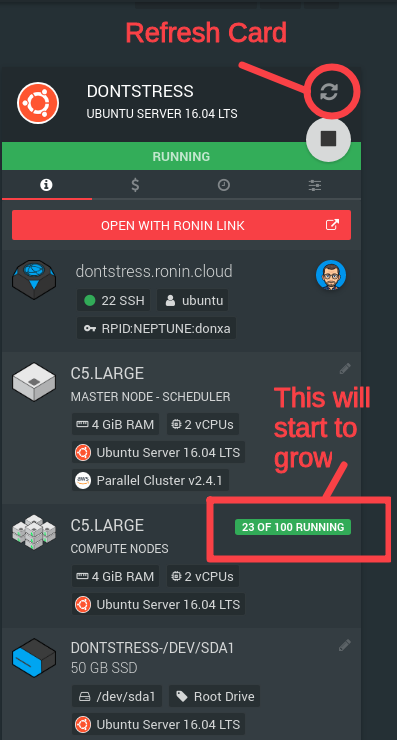

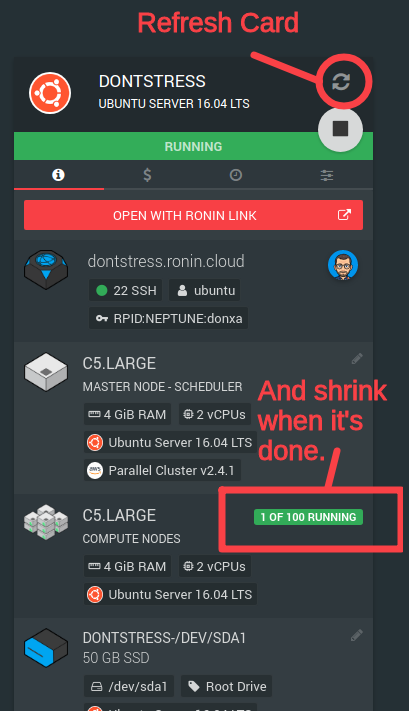

Go back to RONIN and refresh the machine card. The number of compute nodes should be growing (this may take a minute or two).

If we look in our terminal and run:

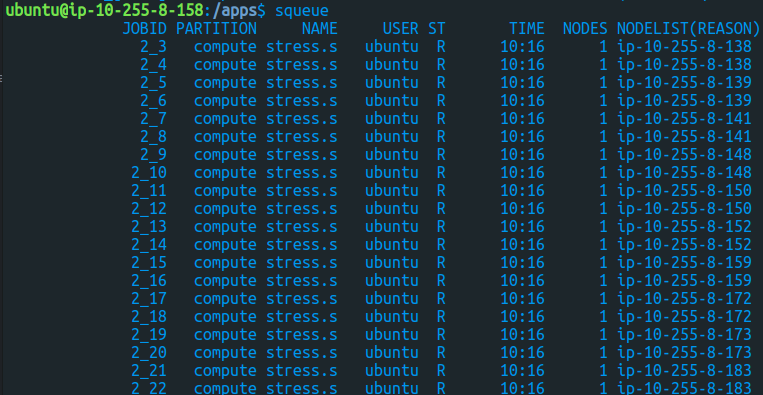

squeue

We should see some jobs being allocated.

But wait, there is more!!

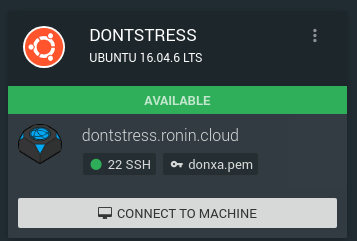

Open the RONIN LINK desktop application and click the "Connect to Machine" button. If you don't see this button in RONIN LINK, you really should update it here.

Scroll down and click the "Link" button on the Ganglia card.

This will ask for your local computer's password, because we are mapping a port below 1024, which usually requires administrator rights. Enter your laptop's or computer's password.

A browser tab will now open with some really "cool" '90s graphs.

This is the monitoring tool for your cluster. You can learn more about Ganglia here or ask the RONIN Community.

Your cluster will also scale back down when it has run all the jobs.
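If you'd rather watch the scale-down from the terminal than from the machine card, you can poll the queue. A minimal sketch, assuming a hypothetical `wait_for_empty_queue` helper: it checks `squeue -h -u "$USER"` every `interval` seconds until your jobs are gone, then lists each node and its state with `sinfo -h -N -o "%N %t"`. On Slurm clusters that use power saving, released nodes are typically shown with a `~` suffix (for example `idle~`), but the exact states you see depend on how RONIN configures Slurm.

```bash
#!/usr/bin/env bash
# Hypothetical helper: block until the current user has no jobs in Slurm,
# then print each node with its compact state so the scale-down is visible.
wait_for_empty_queue() {
  local interval="${1:-60}"  # seconds between checks
  # squeue -h -u prints one line per job owned by the user; empty means done.
  while [ -n "$(squeue -h -u "$USER")" ]; do
    sleep "$interval"
  done
  echo "Queue empty for $USER"
  # -N: one line per node; %N node name, %t compact state (may carry a suffix
  # such as "~" for powered-down nodes, depending on the cluster's setup).
  sinfo -h -N -o "%N %t"
}

# Usage:
# wait_for_empty_queue 60
```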

Welcome to the club: you are officially a nerd!