Parallelizing Workloads With Slurm (Brute Force Edition)

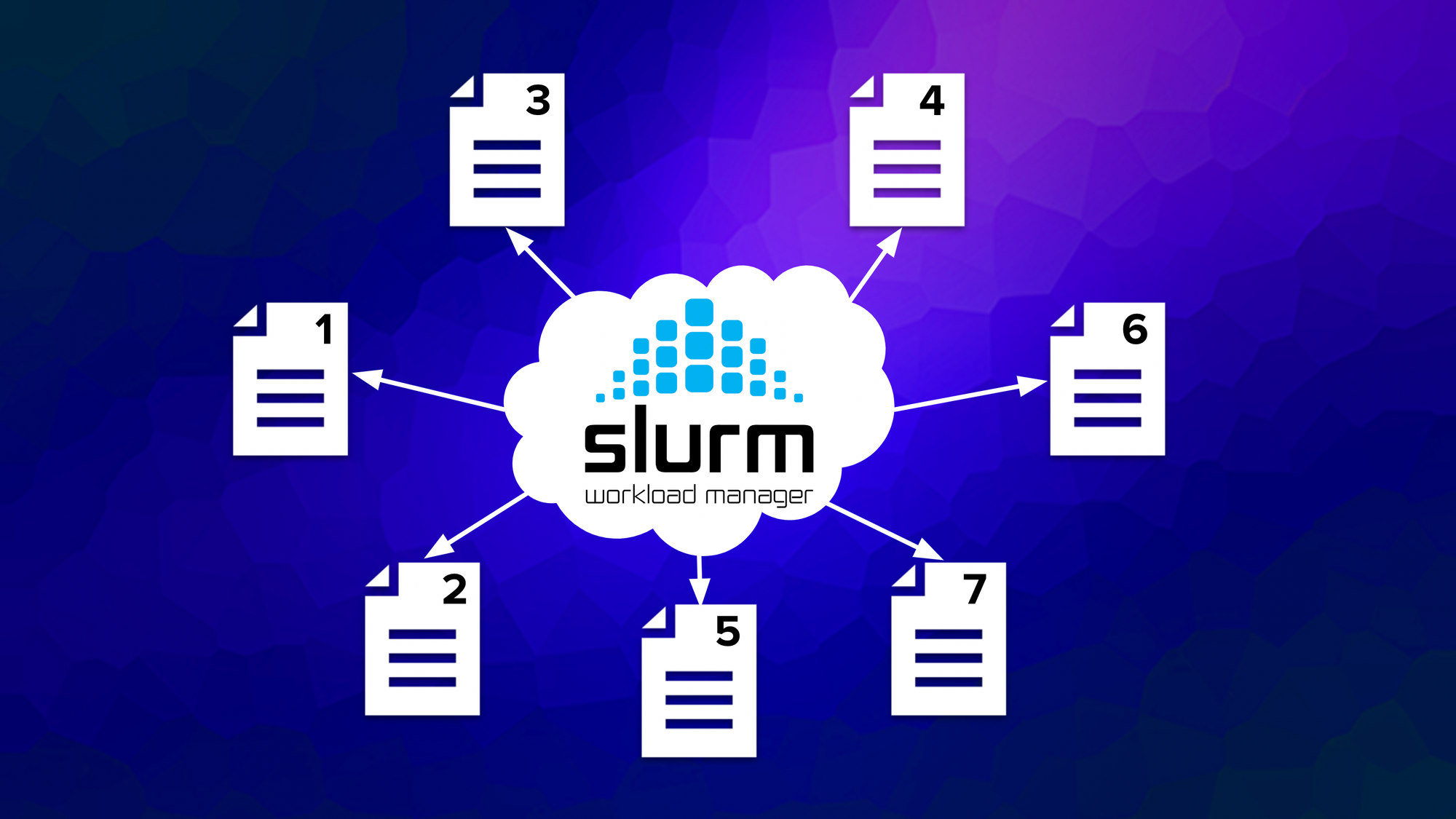

One of the reasons researchers turn to clusters and Slurm is to run many independent jobs. Recently, we posted about how to use Slurm job arrays to do this. A job array is a way to group many jobs with the same compute and memory needs together so that the scheduler can be more efficient. However, you can also submit jobs independently. Often, the issue comes down to how to script the job submission in a way that makes the most sense. Here we describe some additional intuitive ways of getting the job(s) done.
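For contrast, here is a minimal sketch of what the job array route can look like. It is an illustration under assumptions, not the script from our earlier post: the helper nth_line and a three-line subjects.txt are hypothetical, while --array and SLURM_ARRAY_TASK_ID are standard Slurm.

```shell
#!/bin/bash
# One array task per line of subjects.txt (assumes the file has 3 lines)
#SBATCH --array=1-3
#SBATCH -c 1

# Hypothetical helper: print line number $1 of file $2
nth_line() {
    sed -n "${1}p" "$2"
}

# Slurm sets SLURM_ARRAY_TASK_ID inside each array task;
# outside of a job the variable is unset and nothing runs.
if [ -n "${SLURM_ARRAY_TASK_ID:-}" ]; then
    subject=$(nth_line "$SLURM_ARRAY_TASK_ID" subjects.txt)
    recon-all -s "$subject" -i "${subject}.nii.gz" -all -qcache
fi
```

A single sbatch call on this file would queue all three tasks at once. The methods in this post instead call sbatch once per job.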

Method 1. Use Wrap.

In neuroimaging, the popular FreeSurfer workflow recon-all takes several hours per subject to complete. It is run on a subject (here, one called sub96234) with the following command:

    recon-all -s sub96234 -i sub96234.nii.gz -all -qcache

Don't worry about the meaning of the specific parameters. To submit this job to a compute node in Slurm, you can either create a Slurm batch script with this command inside and use sbatch to submit it, or "wrap" it using sbatch. The syntax for wrapping a command is:

    sbatch --wrap="recon-all -s sub96234 -i sub96234.nii.gz -all -qcache"

Besides --wrap, sbatch accepts on the command line all the options you might put in a Slurm batch script, such as the number of nodes and the number of tasks per node. So you can in fact submit a job without writing a Slurm batch script at all. You can try this with a simple command that sleeps for a given number of seconds (here 300 seconds, or 5 minutes):

    sbatch --wrap="sleep 300"

Suppose you have a list of subjects (in a file called subjects.txt) that you need to process using FreeSurfer. You can write a single loop to submit them all to Slurm with --wrap as follows.

    # submit one job per subject ID listed in subjects.txt
    for s in $(cat subjects.txt)
    do
        sbatch --wrap="recon-all -s $s -i ${s}.nii.gz -all -qcache"
    done

Method 2. Write one job submission script and submit it with different arguments.

This example and the next are modified from documentation for the FASRC cluster at Harvard. This example shows how to run the bioinformatics software TopHat. The script below expects one argument, $1, which is the name of the file to process.

    #!/bin/bash
    # tophat_manyfiles.sbatch
    #
    #SBATCH -c 1 # one CPU

    # $1 is the FASTQ file name passed on the sbatch command line
    mkdir -p "${1}_out"
    cd "${1}_out" || exit 1
    # note: after the cd, a relative index path is resolved from inside ${1}_out
    tophat databases/Mus_musculus/UCSC/mm10/Sequence/BowtieIndex "../$1"

The next step is to write a script that uses sbatch to submit this job, passing in the expected file name each time. In the script below, the variable FILE is set in turn to each of the FASTQ files matching the pattern *.fq. The same variable is used to name the stdout and stderr files, so that if an error occurs you can tell which file caused it.

    #!/bin/bash
    # loop over all FASTQ files in the directory
    # print the filename (so we have some visual progress indicator)
    # then submit the tophat jobs to Slurm
    #
    for FILE in *.fq; do
        echo "${FILE}"
        sbatch -o "${FILE}.stdout.txt" -e "${FILE}.stderr.txt" tophat_manyfiles.sbatch "${FILE}"
    done

Method 3. Parameter Sweep With Environment Variables

Sometimes you need to loop through not just a set of files, but combinations of parameters. Here we show how to do that using the same approach as Method 2, but with environment variables rather than positional arguments to pass the values. For a more general way to submit jobs with multiple parameters, see our blog post on submitting array jobs.
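The mechanism this method relies on is ordinary environment inheritance. Here is a minimal sketch that runs without Slurm: bash -c stands in for the submitted job, and run_child is a hypothetical helper that reports what a child process sees. It assumes nothing about your cluster.

```shell
#!/bin/bash
# Sketch only: bash -c stands in for the submitted job so this runs anywhere.
# run_child is a hypothetical helper that prints what a child process sees.
run_child() {
    bash -c 'echo "PARAM1=${PARAM1:-unset} SAMPLE_SIZE=${SAMPLE_SIZE:-unset}"'
}

# start clean in case the variables already exist in this shell
unset PARAM1 SAMPLE_SIZE

PARAM1=3
SAMPLE_SIZE=50
run_child    # not exported yet, so the child does not see the values

export PARAM1 SAMPLE_SIZE
run_child    # exported, so the child inherits both values
```

Assuming sbatch's default --export=ALL behaviour, a submitted job inherits exported variables in the same way. The values can also be passed explicitly, for example with sbatch --export=ALL,PARAM1=3,SAMPLE_SIZE=50 vary_params.sbatch. The batch script for this method reads the two variables from its environment.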

    #!/bin/bash
    # save as "vary_params.sbatch"
    # PARAM1 and SAMPLE_SIZE come from the environment exported at submission time
    my_analysis_script.sh "${PARAM1}" "${SAMPLE_SIZE}"

Then you can loop through the values of PARAM1 and SAMPLE_SIZE at the shell, or in a separate script, as follows.

    for PARAM1 in $(seq 1 5); do
        for SAMPLE_SIZE in 25 50 75 100; do
            echo "${PARAM1}, ${SAMPLE_SIZE}"
            # export so the submitted job inherits the current values
            export PARAM1 SAMPLE_SIZE
            sbatch -o "out_p${PARAM1}_s${SAMPLE_SIZE}.stdout.txt" \
                   -e "out_p${PARAM1}_s${SAMPLE_SIZE}.stderr.txt" \
                   --job-name="my_analysis_p${PARAM1}" \
                   vary_params.sbatch
        done
    done

Conclusion

As with all things in Linux, there are many ways to get your jobs done! We hope one of the methods you have seen here or in our Slurm Job Array blog post is helpful for your workflow so that you can get out there and start scaling!