Modeling Air Quality With RONIN and Odycloud

In this post, we have invited Arturo Fernandez, founder of Odycloud, to describe how he scales research IT support for a variety of air quality and weather modeling problems.

RONIN is designed around a Service Catalog of pre-installed research software, for machines and for clusters. This enables research IT to improve support for researchers and for researchers to build their own software catalogs for scientific reproducibility. Once an application has been installed and optimized for a particular architecture, it can be shared with others so that this work does not need to be repeated.

Why Use Odycloud AMIs?

High Performance Computing (HPC) has become ubiquitous in numerous fields, and it is now possible to run HPC workloads on the cloud. This gives scientists and researchers the ability to leverage the newest, fastest hardware, often surpassing the performance of on-premises hardware. Cloud infrastructure also makes it easy to save machine images containing the software and its dependencies, so that simulations can be recreated at scale or legacy code reproduced while progress marches on.

While this sounds great, the reality is that it can be hard to install and optimize complex scientific packages on cloud infrastructure because of the myriad factors affecting performance and the variability in the internal topology of the processors used by cloud service providers such as AWS. Installing and optimizing HPC software therefore requires understanding not only the software itself but also the hardware on which it will run, along with all the tools required to install the software and its dependencies. The novice can find these tasks daunting and surprisingly time consuming. Even measuring and comparing the performance of an application on on-premises hardware against a cloud cluster, or of a non-optimized installation against an optimized one, can take days of work. These issues are particularly acute for legacy software, which sometimes will not compile using standard procedures.

Odycloud has put together a set of optimized AMIs for WRF, CMAQ, WRF-CMAQ, and CAMx for different instances offered by AWS. The Weather Research and Forecasting (WRF) model is a mesoscale numerical weather prediction code able to generate forecasts for scales ranging from hundreds of meters to the whole globe. It has a cumulative number of registered users exceeding 48,000 from more than 160 countries.

The Community Multiscale Air Quality Modeling System (CMAQ) is a numerical air quality code able to combine the computation of emission dynamics with the chemistry and physics of the atmosphere. Thousands of users from more than 50 countries are currently using CMAQ to forecast air quality under different atmospheric conditions. In the U.S., CMAQ is used not only by the Environmental Protection Agency (EPA) but also by many state agencies, as it is required to attain the National Ambient Air Quality Standards (NAAQS) defined under the Clean Air Act.

The Comprehensive Air quality Model with eXtensions (CAMx) simulates air quality over many geographic scales. CAMx predicts the dynamics of inert and chemically active pollutants, including ozone, particulate matter, inorganic and organic PM2.5/PM10, and mercury, among others. CAMx can be used on its own or to complement CMAQ, and has users in more than 20 countries.

The AMIs containing these applications and their dependencies are now available on the AWS Marketplace. To subscribe, all you have to do is launch an instance from the console and accept the subscription. There is an additional fee for using the AMI, so it is important to understand the costs associated with the instance, the storage, and the AMI. In this blog post we will focus on performance and cost for CMAQ and WRF-CMAQ.

What About Performance?

The most straightforward approach to measuring performance for CMAQ and WRF-CMAQ is to use the benchmarks prepared by the Community Modeling and Analysis (CMAS) Team.

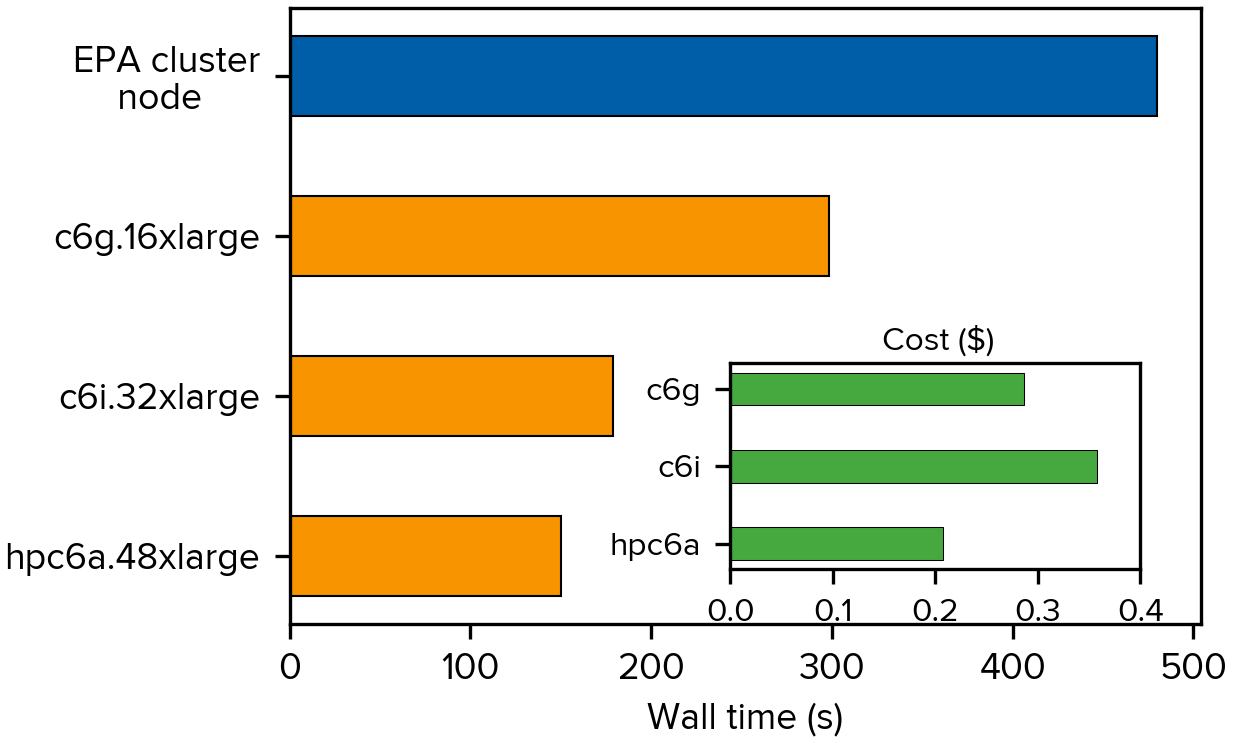

The first benchmark corresponds to a two-day CMAQ simulation (July 1-2, 2016) over the Southeast U.S. with a grid composed of 80 rows by 100 columns at 12 km resolution. This benchmark has been widely used by the CMAQ community to compare performance between different hardware. Figure 1 shows the wall times for a 24-hour prediction for different instances from AWS along with the measurements from the CMAS Team on the EPA cluster (1 node with 32 Intel Xeon E5-2697A v4 cores). The tested AWS instances are c6g.16xlarge, c6i.32xlarge, and hpc6a.48xlarge, powered by Graviton2, Intel Ice Lake, and third-generation AMD EPYC processors, respectively. The results show that the AWS instances achieve lower wall times than the EPA cluster node. The performance of hpc6a.48xlarge is particularly impressive: it requires only 150 s to complete the 24-hour prediction. The inset in the figure shows the cost to perform this benchmark (using the Odycloud AMIs). The hpc6a.48xlarge instance also has the lowest cost among the tested instances.
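A rough way to reason about the cost of a benchmark run is to multiply the wall time by the combined hourly price of the instance and the AMI. The sketch below illustrates that arithmetic in Python. It assumes per-second billing and ignores storage, and both hourly prices are hypothetical placeholders rather than actual AWS or Odycloud rates; only the 150 s wall time comes from the benchmark above.

```python
def benchmark_cost(wall_time_s, instance_usd_per_hour, ami_usd_per_hour=0.0):
    """Estimate the compute cost (USD) of a single run.

    Assumes per-second billing and ignores storage and data transfer.
    """
    hours = wall_time_s / 3600.0
    return hours * (instance_usd_per_hour + ami_usd_per_hour)


# 150 s is the hpc6a.48xlarge wall time quoted above.
# The two prices are HYPOTHETICAL placeholders; substitute current rates.
cost = benchmark_cost(
    wall_time_s=150,
    instance_usd_per_hour=3.00,  # placeholder instance price
    ami_usd_per_hour=1.00,       # placeholder AMI software fee
)
print(f"Estimated cost: ${cost:.3f}")
```

Because cost scales with wall time, an instance with a higher hourly price can still be the cheapest option if it finishes the run sufficiently faster.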

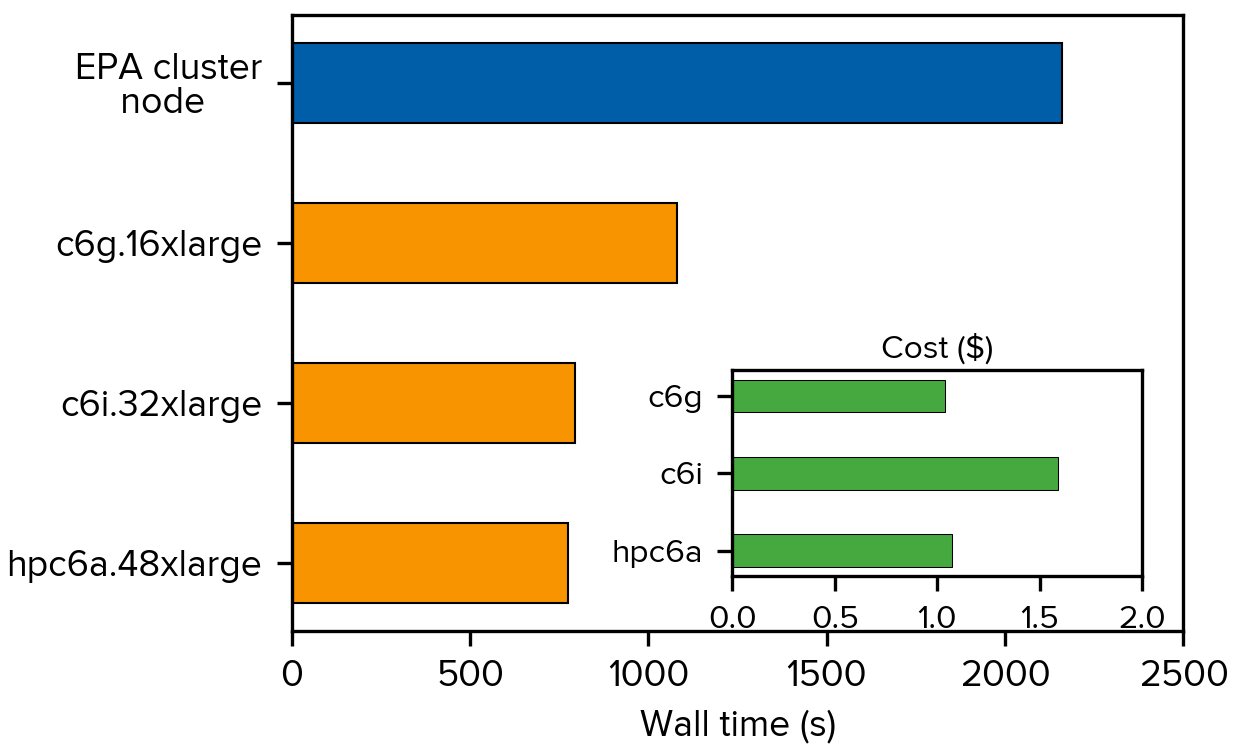

One of the advantages of CMAQ is that it can be coupled to WRF to produce simultaneous predictions of weather and air quality. The downside is that these computations are usually time consuming, so it is important to measure performance with cloud resources. The CMAS Team has also created a WRF-CMAQ benchmark with a similar setup to the U.S. Southeast (2016) CMAQ benchmark. A caveat with this benchmark is that WRF imposes a minimum size on the subdomains assigned to each process, so the benchmark will not run on more than 80 MPI ranks.
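The rank limit can be understood with a small calculation. The sketch below assumes a minimum subdomain (patch) size of 10 grid cells in each direction, which is the limit that recent WRF versions report when a domain is decomposed too finely; treat that value as an assumption and check it against your WRF version. It also assumes the WRF-CMAQ grid matches the 80 by 100 grid of the CMAQ benchmark, which the description above only says is similar.

```python
def max_mpi_ranks(n_cols, n_rows, min_patch_cells=10):
    """Largest 2D decomposition that keeps every patch at least
    `min_patch_cells` wide in both directions.

    Returns (ranks_in_x, ranks_in_y, total_ranks).
    """
    ranks_x = n_cols // min_patch_cells
    ranks_y = n_rows // min_patch_cells
    return ranks_x, ranks_y, ranks_x * ranks_y


# Grid of the Southeast U.S. benchmark: 100 columns by 80 rows.
ranks_x, ranks_y, total = max_mpi_ranks(n_cols=100, n_rows=80)
print(f"{ranks_x} x {ranks_y} = {total} MPI ranks")  # 10 x 8 = 80 MPI ranks
```

Under these assumptions the result is consistent with the 80-rank ceiling noted for the benchmark.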

Figure 2 shows the wall times for the U.S. Southeast (2016) WRF-CMAQ benchmark with shortwave feedback. Wall times are approximately five times those of the stand-alone CMAQ benchmark because the coupling between the two models consumes significant computational resources. The performance of the AWS instances for WRF-CMAQ is even more impressive than for CMAQ: the Graviton2 instance halves the wall time needed by the EPA cluster node, and the c6i.32xlarge and hpc6a.48xlarge instances require only about 35% of it.

These and other results show that running complex air quality models on AWS infrastructure is not only feasible but that performance rivals and even exceeds that of on-premises hardware.

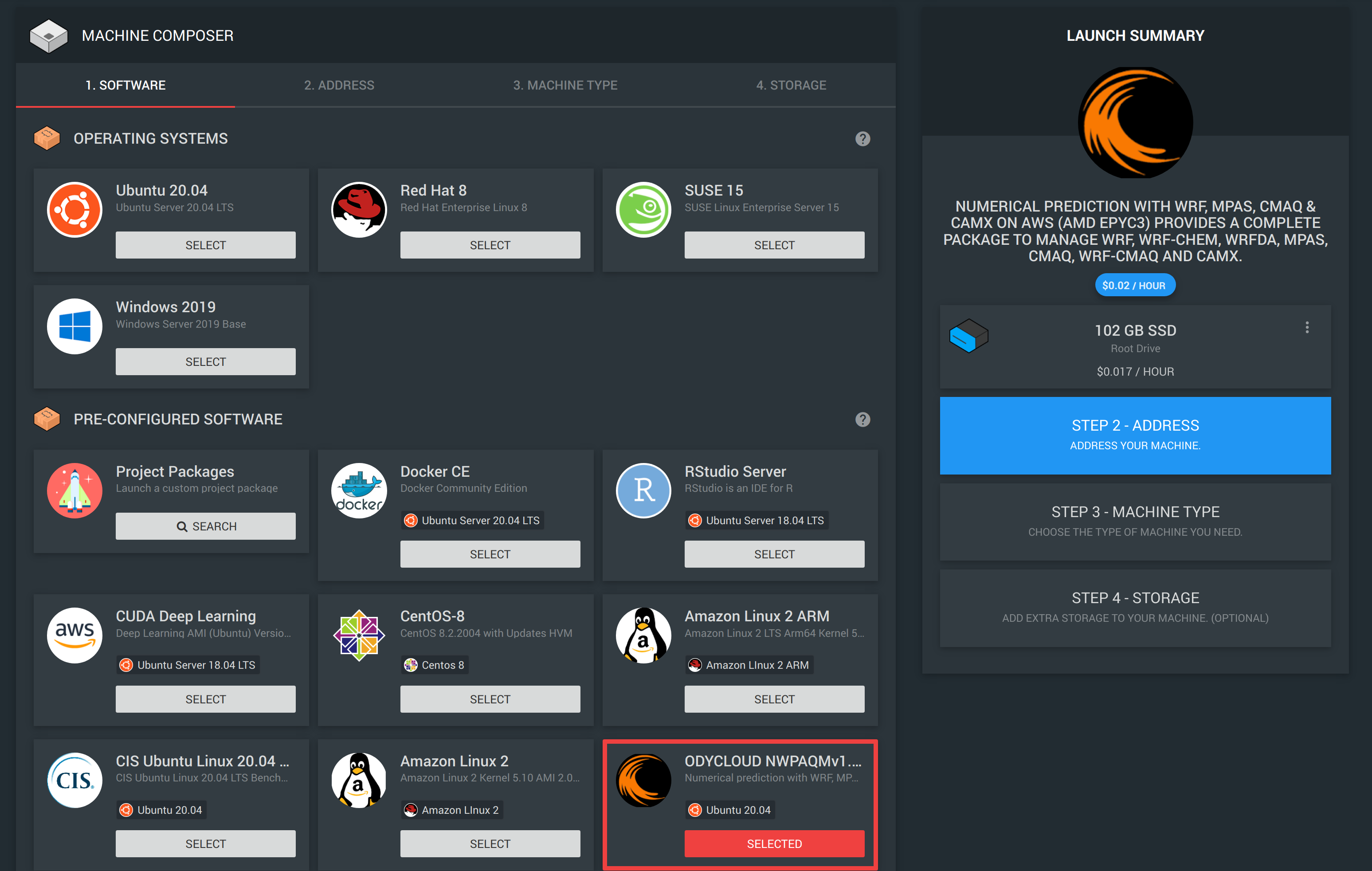

Adding an Odycloud Image to the RONIN Service Catalog

It is easy to use one of the Odycloud images with RONIN. However, a RONIN administrator needs to add it to the RONIN service catalog. The steps to do this are as follows.

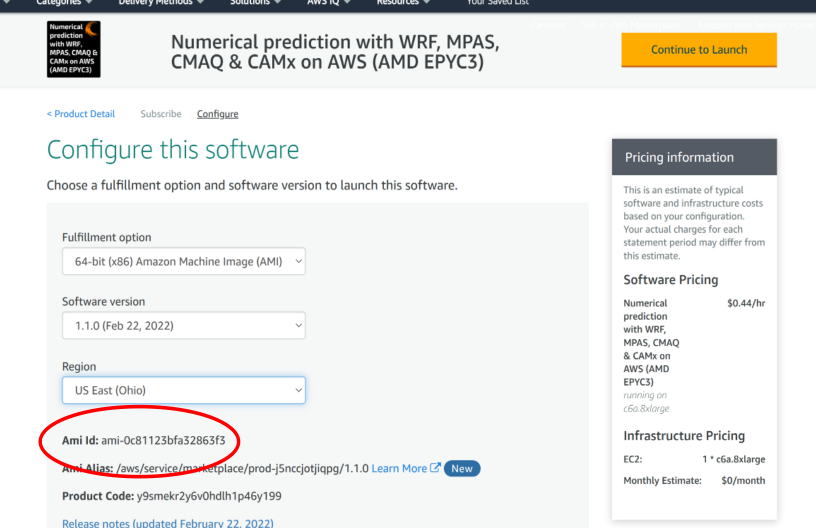

1. You need to accept the Odycloud AMI license. From the Odycloud marketplace, select the AMI you want, and then click on "Continue to Subscribe". You will be prompted to log into the AWS console; log in with the credentials for the AWS account in which RONIN is installed and subscribe to the software. This may take a few minutes to process. Click on "Configuration", change your region to the region in which RONIN is installed, and copy the AMI identifier, as below.

2. Now add the AMI to your RONIN service catalog from the RONIN interface, following instructions in "2. A community (non-RONIN) AMI".

After you have done this, the AMI will appear as an offering in your service catalog, as shown below.
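As a complement to copying the AMI identifier from the console in step 1, the identifier can also be looked up programmatically. The following is a minimal sketch, assuming the boto3 library and AWS credentials for the account in which RONIN is installed. The name pattern `*CMAQ*` and the region `us-east-1` are hypothetical placeholders; the actual AMI names are not given in this post, and the subscription itself still has to be accepted through the Marketplace.

```python
def find_marketplace_amis(ec2_client, name_pattern):
    """Return (ImageId, Name) pairs for AWS Marketplace AMIs whose name
    matches `name_pattern`, newest first.

    `ec2_client` is expected to behave like boto3.client("ec2", ...).
    """
    response = ec2_client.describe_images(
        Owners=["aws-marketplace"],
        Filters=[{"Name": "name", "Values": [name_pattern]}],
    )
    images = sorted(
        response["Images"],
        key=lambda image: image.get("CreationDate", ""),
        reverse=True,
    )
    return [(image["ImageId"], image.get("Name", "")) for image in images]


# Example usage (requires boto3 and valid AWS credentials):
#
#   import boto3
#   ec2 = boto3.client("ec2", region_name="us-east-1")  # region where RONIN runs
#   for image_id, name in find_marketplace_amis(ec2, "*CMAQ*"):
#       print(image_id, name)
```

AMI identifiers are specific to a region, so the client should be created for the same region in which RONIN is installed.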

Conclusion

We hope this blog post illustrates the power of the cloud, the power of Odycloud, and the power of RONIN, working together to make research computing easier. Teamwork makes the dream work!