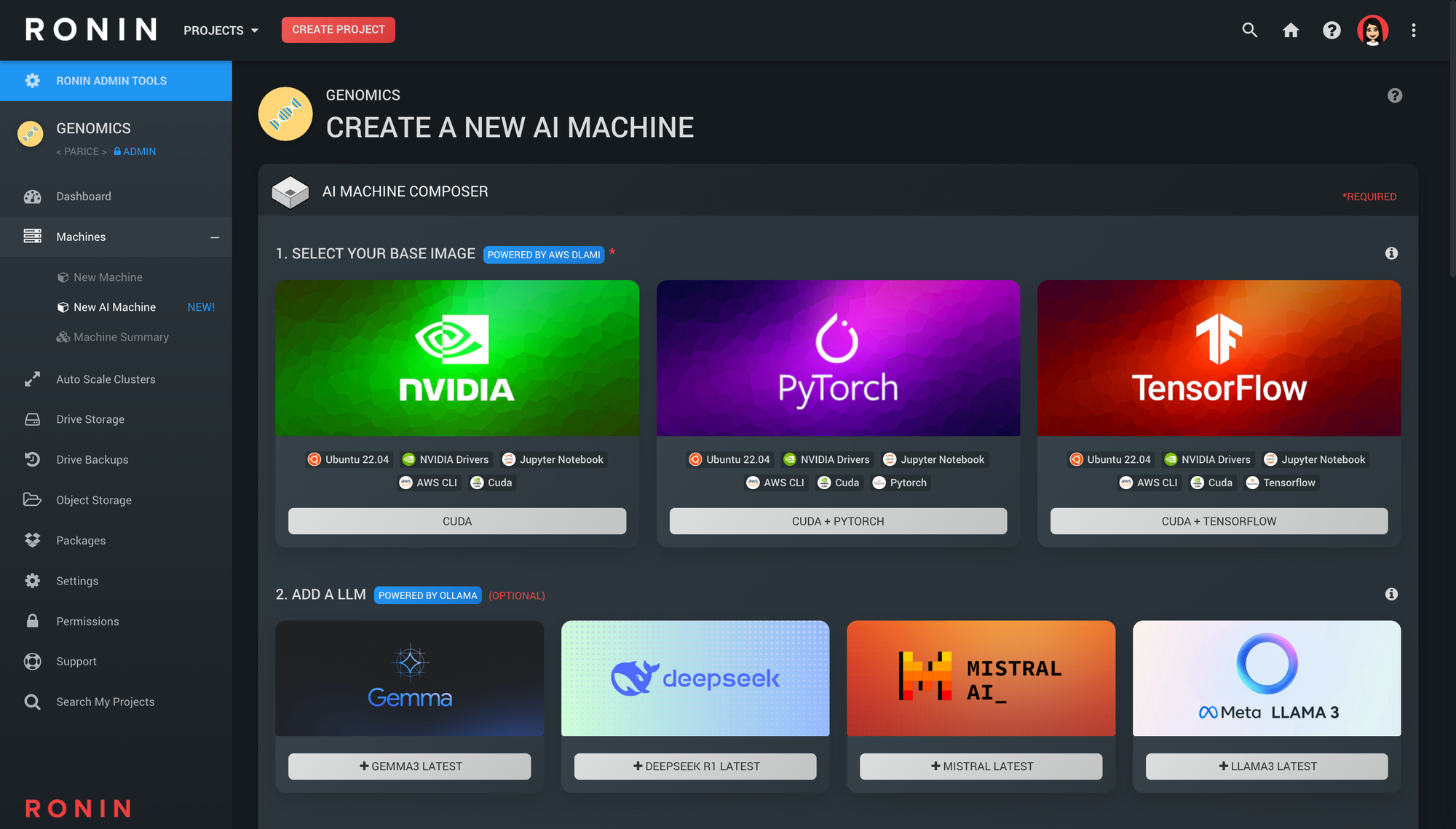

Start Building AI: A New Way to Deploy LLMs in RONIN

Whether you need a sandbox for LLaMA, a secure environment for sensitive data, or just raw GPU power without the setup costs, RONIN can handle the heavy lifting.

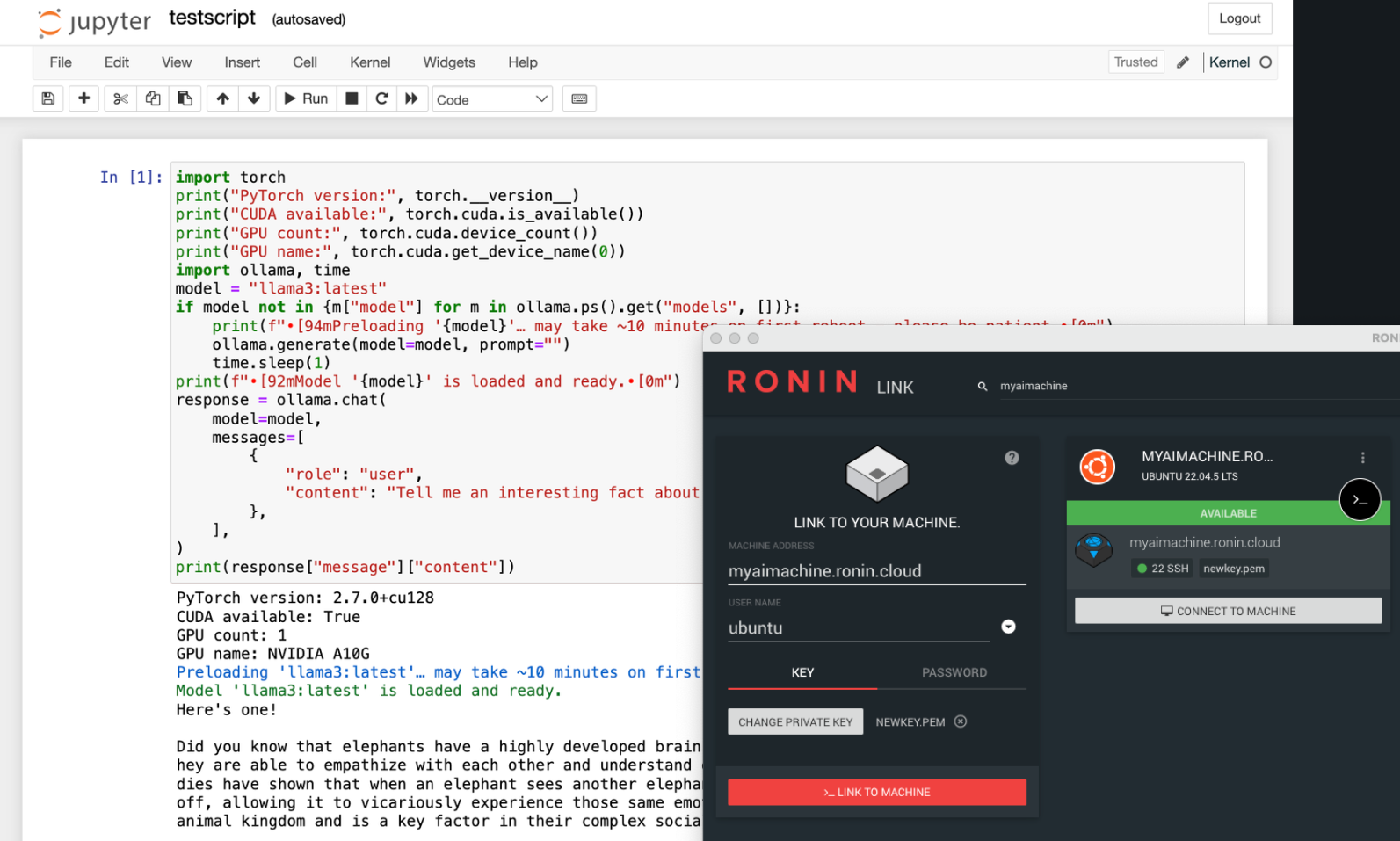

We all know the promise of AI: generative text, coding assistants, and deep learning magic. But for anyone trying to build or fine-tune models locally (especially to keep sensitive data secure), the reality is often less "magic" and more "headache." Between configuring NVIDIA drivers, wrestling with PyTorch versions, and debugging Jupyter environments, you can easily spend more time acting as a sysadmin than as a data scientist.

That's why we built the new RONIN AI Machine workflow. We've automated the chaos so you can skip straight to the code.

Key Features:

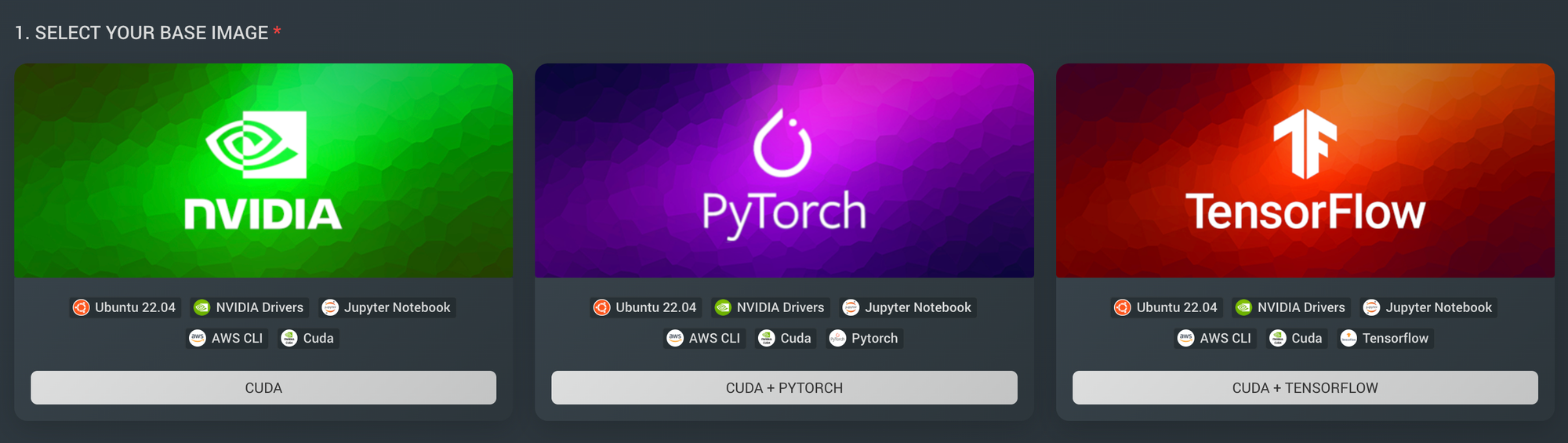

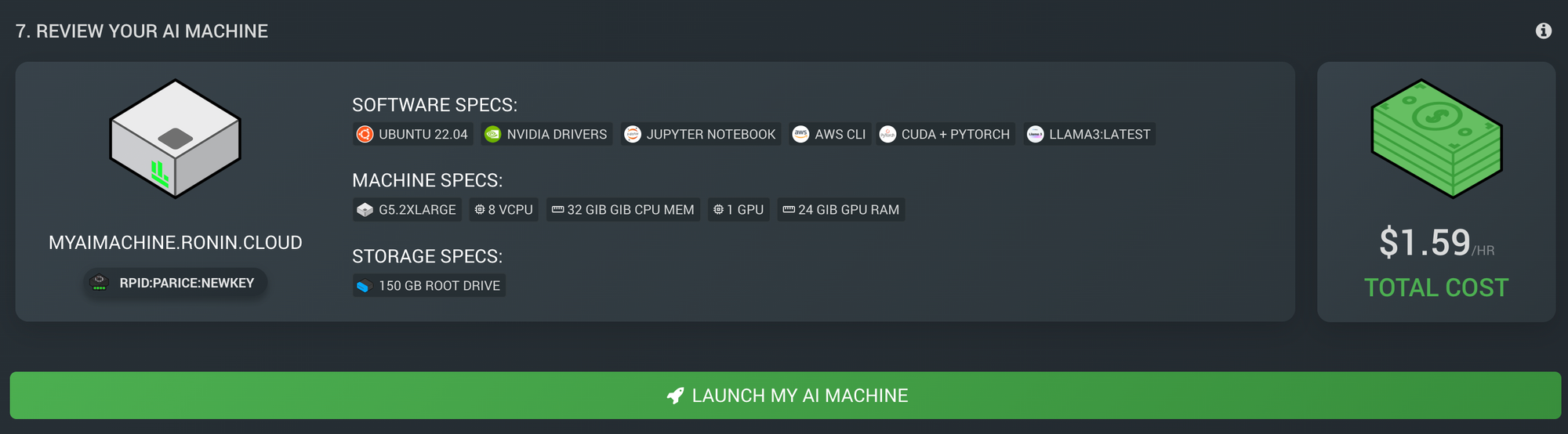

- One-Click Environments: We deploy AWS Deep Learning images pre-configured with CUDA and either PyTorch or TensorFlow.

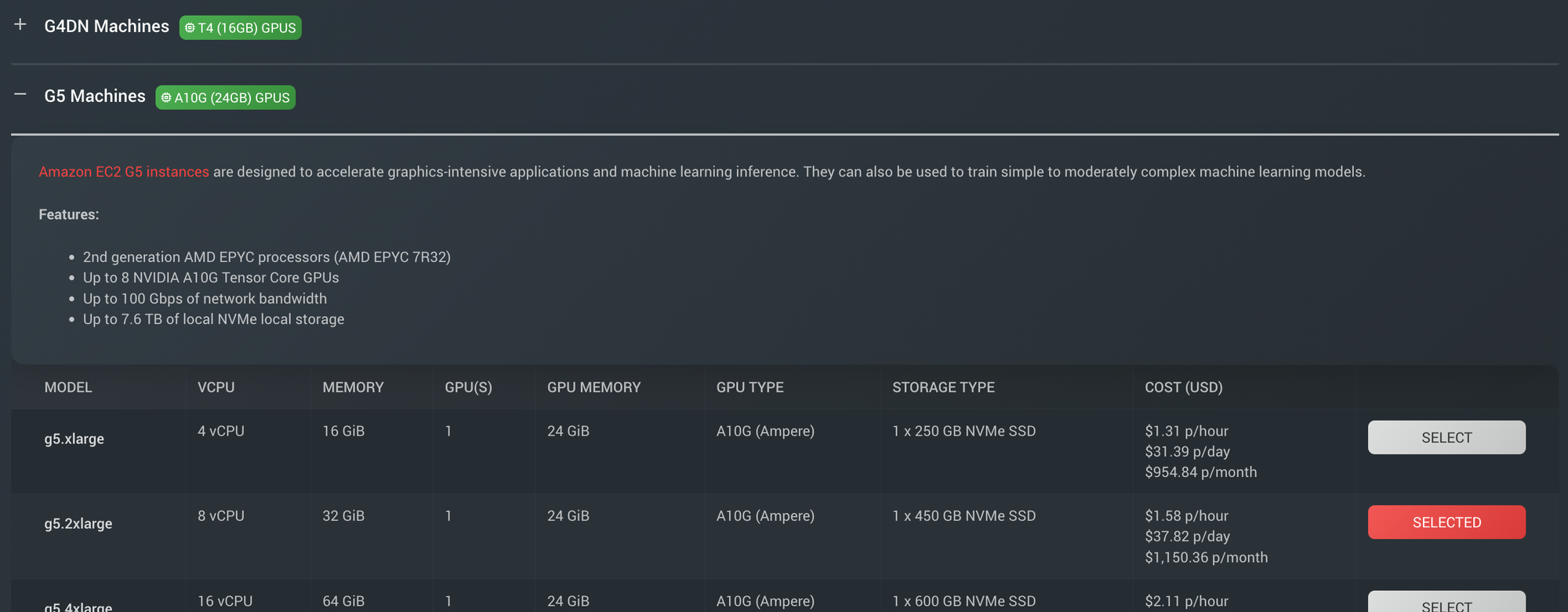

- Right-Sized Power: Easily choose between G4, G5, or G6 instances to balance performance with cost (starting from around $1.50/hr).

- Secure & Private: Your data stays on your machine, accessible via secure SSH and our seamless RONIN LINK desktop app.

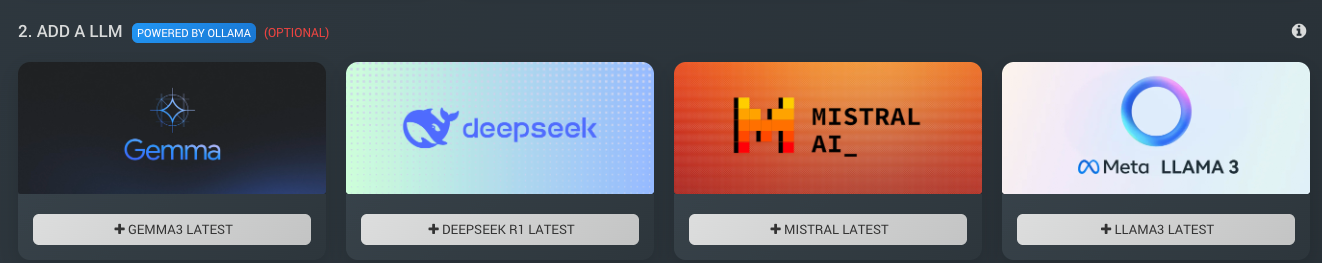

- Instant LLM Integration: Select popular models such as LLaMA, Mistral, or Gemma during launch and have them installed automatically via Ollama.

- Cost Prediction: See how much your machine will cost BEFORE you launch it!

...plus all the other amazing features of RONIN.

If you already have a RONIN, what are you waiting for? Check out our blog post on how to create your AI Machine!

If you don't, stop Googling error codes and start training AI models with RONIN!