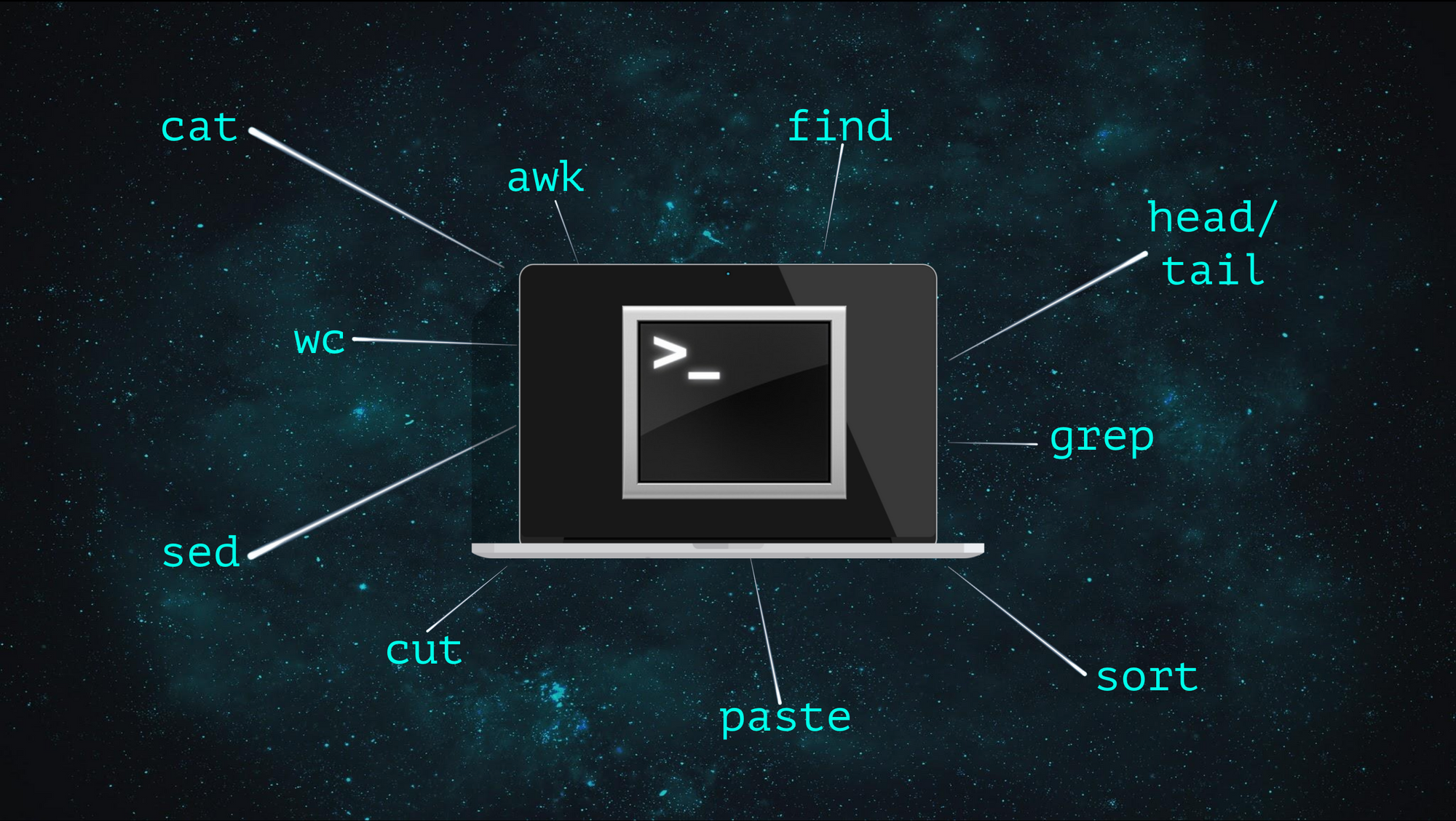

10 Simple Linux Commands Every Researcher Should Know

Being able to work with and manipulate files directly via the command line is one of the most useful skills to develop when working in the cloud.

This blog post will introduce you to 10 common Linux commands that are crucial for your analysis arsenal:

- wc

- cat

- head/tail

- grep

- sed

- cut

- paste

- sort

- awk

- find

wc

The wc command can be used to count the number of lines, words and characters in a file:

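wc myfile.txt

To get just the number of lines, use the -l flag:

wc -l myfile.txt

To get just the number of words, use the -w flag:

wc -w myfile.txt

To get just the number of bytes, use the -c flag (for plain ASCII text this equals the number of characters; use -m to count characters in multi-byte encodings):

wc -c myfile.txt

cat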

cat will print the contents of a file to the screen:

cat myfile.txt

Multiple files can be printed one after the other, which can be useful for joining multiple files together:

cat file1.txt file2.txt > myjoinedfile.txt

To number the lines that are printed with cat, use the -n flag:

cat -n myfile.txt

To make non-printing characters visible (other than tabs and line ends), use the -v flag:

cat -v myfile.txt

Multiple flags can be used together:

cat -nv myfile.txt

Check out the manual for other flags that might be useful:

man cat

head/tail

Sometimes you might not want to view a whole file, but rather just a small portion to have a glimpse at what the file looks like.

head allows you to view the top of the file. By default, the first 10 lines of the file are printed to the screen:

head myfile.txt

tail allows you to view the bottom of the file. By default, the last 10 lines of the file are printed to the screen:

tail myfile.txt

You can specify the number of lines printed using the -n flag:

head -n 50 myfile.txt

tail -n 20 myfile.txt

For the tail command, you can add a + before the number, which will instead print all lines starting from that line number to the end of the file. For example, to print all lines from line 25 to the end of the file:

tail -n +25 myfile.txt

You can therefore combine head and tail commands to print a certain range of lines. For example, to print lines 50 to 55 (six lines, starting at line 50):

tail -n +50 myfile.txt | head -n 6

Another handy tail feature to know about is the -f flag, which follows the end of the file as additional data is appended to it. For example, you can use the -f flag if you want to monitor a log file as it is being written to:

tail -f mylog.txt

grep

grep stands for "global regular expression print". It allows you to search for a string of characters (called a regular expression) in a specified file, and print all lines containing the search string to the screen. For example, to search for the word "error" in a file:

grep "error" myfile.txt

By default, grep is case sensitive, but you can make it case insensitive using the -i flag:

grep -i "error" myfile.txt

If you only want to count how many lines contain your search string, use the -c flag:

grep -c "error" myfile.txt

To print the lines containing your search string with their line numbers in the file, use the -n flag:

grep -n "error" myfile.txt

To print all lines that DON'T contain your search string, use the -v flag:

grep -v "error" myfile.txt

To treat your search string as a whole word (i.e. the match must not be directly preceded or followed by a letter, digit or underscore), use the -w flag:

grep -w "error" myfile.txt

#Will find lines including "error" but not "errored" etc

The search string can include special characters to help narrow down your results. For example the ^ can be used to find strings that appear only at the start of a line, while the $ can be used to find strings that appear only at the end of a line:

#Find lines that start with "error"

grep "^error" myfile.txt

#Find lines that end with "error"

grep "error$" myfile.txt

Wildcards can also be used within the search string to broaden your results. For example, the . character matches any single letter, number or other character, while the * matches zero or more repetitions of whatever comes directly before it, so .* matches any run of characters:

#Find lines containing a "c" followed somewhere later by an "h"

grep "c.*h" myfile.txt

Complex search strings can be specified by combining several regular expressions. The -E flag also allows for extended regular expressions when needed. We won't go into more detail here, but please refer to this great post on regular expressions in grep for more information.
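As a rough illustration of the -E flag, the sketch below builds a throwaway log file and searches it with an extended regular expression. The file name mylog.txt and its contents are made up for this example, and the temporary directory is only there so nothing in your working directory is touched.

```shell
# Create a small example file in a temporary directory (contents are invented)
tmpdir=$(mktemp -d)
printf '%s\n' 'error: disk full' 'warning: low memory' 'info: all good' 'Error at end: error' > "$tmpdir/mylog.txt"

# -E enables extended regular expressions, so | means "or" and () groups alternatives
# Prints the lines that START with "error" or "warning" (case sensitive)
grep -E "^(error|warning)" "$tmpdir/mylog.txt"

# Flags can be combined: count lines containing either word, ignoring case
match_count=$(grep -ciE "error|warning" "$tmpdir/mylog.txt")
echo "$match_count"

# Clean up the temporary directory
rm -r "$tmpdir"
```

Without -E, the | would be treated as a literal character, which is one of the more common reasons a grep pattern silently matches nothing.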

sed

The most common use for the sed command is for string substitution (find and replace). sed has a very specific command structure as follows:

sed 's/find/replace/' myfile.txt

For example, to replace "sample1" with "ID2023" in myfile.txt and print the result to the screen:

sed 's/sample1/ID2023/' myfile.txt

By default, sed will only replace the first occurrence of the string in a line. To replace a different occurrence instead, add its number after the 3rd /. For example, to replace only the third occurrence on each line:

sed 's/sample1/ID2023/3' myfile.txt

To replace all occurrences in a line, add a g (which stands for global) at the end instead of a number:

sed 's/sample1/ID2023/g' myfile.txt

To only replace occurrences on particular lines within the file, add the line number or range before the s/:

#Perform substitution on line 3 of the file

sed '3 s/find/replace/g' myfile.txt

#Perform substitution on lines 3-9 of the file

sed '3,9 s/find/replace/g' myfile.txt

sed can also be used to delete specific lines from a file:

# Delete the 5th line of the file

sed '5d' myfile.txt

# Delete the last line of the file

sed '$d' myfile.txt

# Delete lines 3-5 of the file

sed '3,5d' myfile.txt

# Delete lines containing "pattern"

sed '/pattern/d' myfile.txt

Instead of deleting lines with d, lines can also be appended or inserted using a or i respectively, as demonstrated in this helpful post.
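To make the a and i commands a little more concrete, here is a minimal sketch. It assumes GNU sed (the version found on most Linux systems), which accepts the text on the same line as the command; other sed implementations may need a different syntax. The file samples.txt and its contents are invented for the example.

```shell
# Create a small example file in a temporary directory (contents are invented)
tmpdir=$(mktemp -d)
printf '%s\n' 'sample1' 'sample2' 'sample3' > "$tmpdir/samples.txt"

# i inserts a line BEFORE the addressed line: add a header before line 1
sed '1i sample_id' "$tmpdir/samples.txt"

# a appends a line AFTER the addressed line: add a line after each line matching "sample2"
appended=$(sed '/sample2/a sample2_repeat' "$tmpdir/samples.txt")
echo "$appended"

# Clean up the temporary directory
rm -r "$tmpdir"
```

As with substitutions, both commands only print the modified text; the file itself is left unchanged.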

Since sed prints the result to the screen by default, to save the changes you have made you either need to direct the output to a file, OR use the -i flag to make the changes in the original file:

#Save to new file

sed 's/sample1/ID2023/3' myfile.txt > myalteredfile.txt

#Change original file

sed -i 's/sample1/ID2023/3' myfile.txt

cut

The cut command allows you to easily extract particular columns from a file. For example, to extract columns 1 and 3:

cut -f 1,3 myfile.txt

The default field separator for cut is the tab character. You can specify a different delimiter using the -d flag. For example, to extract columns 1-9 from a comma separated file:

cut -d ',' -f 1-9 myfile.csv

The output delimiter will default to the input delimiter, but this can be changed using the --output-delimiter flag:

cut -d ',' -f 1-9 myfile.csv --output-delimiter=':'

paste

The paste command allows you to join files horizontally whereby each line of the specified files will be joined by a tab, turning each line into a column. For example, if you had a list of names in a file called names.txt and a list of ages in a file called ages.txt, you could create a single file with the first column being names, and the second column being age like so:

paste names.txt ages.txt > output.txt

Tab is the default delimiter but this can be changed using the -d flag:

paste -d ',' names.txt ages.txt > output.csv

paste can also be used to merge all of the lines from a file into a single line, with each original line separated by a tab. This turns each file into a row instead of a column (i.e. transposing the data). For example, to create a file where the first line includes all of the names from names.txt and the second line includes all of the ages from ages.txt:

paste -s names.txt ages.txt > transposed.txt

To read a file from standard input, you can pipe it to paste and use - like so:

# Pass names as the first column, and add ages as the second column

cat names.txt | paste - ages.txt

# Pass names as the second column, and add ages as the first column

cat names.txt | paste ages.txt -

sort

The sort command is useful for sorting lines or columns within a file. By default, sort will order based on the characters at the start of the line in this order:

- Numbers in ascending order

- Letters in ascending order (lowercase first, then uppercase; the exact order depends on your locale settings)

sort myfile.txt

To reverse the order i.e. sort in descending order, use the -r flag:

sort -r myfile.txt

Since the default sorting will sort numbers by character rather than by their numeric value (i.e. 11 would come before 2), use the -n flag to sort by numeric value instead:

sort -n myfile.txt

Multiple flags can be used together to achieve the desired order:

sort -rn myfile.txt

To sort the file based on a particular column rather than the start of the line, use the -k flag:

#Sort based on column 2 in default order

sort -k2,2 myfile.txt

#Sort based on column 2 in numeric order

sort -k2,2n myfile.txt

#Sort based on column 2 in default order, then by column 1 in descending order

sort -k2,2 -k1,1r myfile.txt

To sort the file and then remove duplicates, use the -u flag:

sort -u myfile.txt

awk

awk is a simple, yet incredibly powerful Linux command for working with column-based data. awk has too much functionality for us to describe in detail here, but there are a range of great online tutorials that cover awk in more depth. Jonathan Palardy's awk tutorial is excellent for beginners!

The basic structure of an awk command is as follows:

awk 'condition {action}' myfile.txt

Each line of the file is checked against the condition and then the action is performed on matching lines. At least one of the condition or the action must be given; if one is absent, awk falls back to the default behavior:

- The default condition is that the action will be performed on all lines of the file

- The default action is that the whole line will be printed if it satisfies the condition

In awk commands, a $ followed by a number refers to that column (e.g. $1 is the first column), and $0 represents the entire line (i.e. all columns).

Let's take a look at some examples to show how the conditions, actions and columns work in an awk command:

# Print the 1st and 3rd columns of the file

awk '{print $1, $3}' myfile.txt

# Note: no condition was given so the 1st and 3rd columns of EVERY line will be printed by default

# Print lines where the 2nd column is greater than 100

awk '$2 > 100' myfile.txt

# Note: no action was given so the default is to print the entire line i.e. {print $0}

# Print the 1st and 3rd columns of lines where the 2nd column is greater than 100

awk '$2 > 100 {print $1, $3}' myfile.txt

# Note: both a condition and an action are specified

In the examples above we showed how the condition can use comparison operators such as ==, !=, <, >, <=, >= to perform the action on lines satisfying the condition. The condition can also use regular expressions to match particular strings, for example:

# Print lines where the 2nd column starts with 2020

awk '$2 ~ /^2020/' myfile.txt

# Print lines where the 2nd column DOES NOT start with 2020

awk '$2 !~ /^2020/' myfile.txt

Multiple conditions can also be specified using the AND && or OR || operators like so:

# Print lines where the 2nd column starts with 2020 AND the 5th column is less than 100

awk '$2 ~ /^2020/ && $5 < 100' myfile.txt

# Print lines where the 2nd column starts with 2020 OR the 5th column is less than 100

awk '$2 ~ /^2020/ || $5 < 100' myfile.txt

The default field separator of awk is any whitespace (tabs or spaces etc). To change this, use the -F flag:

awk -F ',' '{print $1, $2}' myfile.csv

There are tons of other useful features of awk like the ability to use both custom and built in variables like NR, NF, and FNR for managing different lines and files, as well as special controls like BEGIN, END, and next to control when certain actions are performed. You can even use arrays in awk!
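For a small taste of those features, the sketch below uses NR, NF, BEGIN, END and an array on a made-up comma separated file. The file name counts.csv, its contents and the array name total are all invented for this example.

```shell
# Create a small example file in a temporary directory (contents are invented)
tmpdir=$(mktemp -d)
printf '%s\n' 'sampleA,control,10' 'sampleB,treated,25' 'sampleC,treated,15' > "$tmpdir/counts.csv"

# NR is the current line number and NF is the number of columns on that line
awk -F ',' '{print NR, NF, $1}' "$tmpdir/counts.csv"

# BEGIN runs once before the first line is read (here it sets the field separator),
# END runs once after the last line; the array "total" sums column 3 per group in column 2
treated_total=$(awk 'BEGIN {FS = ","} {total[$2] += $3} END {print total["treated"]}' "$tmpdir/counts.csv")
echo "$treated_total"

# Clean up the temporary directory
rm -r "$tmpdir"
```

Setting FS inside BEGIN is equivalent to passing -F ',' on the command line; which one to use is mostly a matter of taste.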

As you can tell, awk can get pretty complex to fit your exact needs, but here we just wanted to introduce you to the command. Often the best way to learn awk is to start simple and work your way up as you think of new use cases. It can take a little bit of time to learn, but we promise it is definitely worth it!

find

Last, but certainly not least, the find command. As the name suggests, this command allows you to search for particular files or directories within a given file hierarchy.

For example, to list all files and directories within and under the current directory:

find ./

Other flags can be used to narrow your search. For example, to look for files or directories with a particular name, you can use the -name flag. Put patterns containing wildcards in quotes so that the shell passes them to find unchanged instead of expanding them itself:

# Find all txt files within and below the current directory

find ./ -name "*.txt"

You can also specify whether you are looking for a regular file or directory with the -type flag:

# Find a directory called "rawdata" from the current directory

find ./ -type d -name rawdata

# Find a file called "stats.txt" from the current directory

find ./ -type f -name stats.txt

The default action of find is to print a list of found files to the screen. If you wish to execute a command on the found files, use the -exec flag with {} as a placeholder for each file and \; to denote the end of the command (this ensures the command is run on each found file separately):

# Find all txt files within and below the current directory and remove them with confirmation

find ./ -type f -name "*.txt" -exec rm -i {} \;

# Find all bam files within and below the current directory and run myscript.sh on them

find ./ -type f -name "*.bam" -exec ./myscript.sh {} \;

Note: Multiple -exec commands can be run if needed, but the later commands will only be run if the previous one completes successfully:

find . -name "*.txt" -exec cat {} \; -exec grep sample1 {} \;

There are lots of other flags that may be useful when running find, such as finding files based on their size, modification time, or permissions. Refer to the manual (man find) for more information.
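As a brief sketch of searching by size and modification time, the example below creates two throwaway files and looks for them with the -size and -mtime flags. The file names and sizes are invented for the example.

```shell
# Create two example files in a temporary directory: one tiny, one 20 KiB
tmpdir=$(mktemp -d)
echo "small" > "$tmpdir/small.txt"
head -c 20480 /dev/zero > "$tmpdir/big.bin"

# -size +10k matches files larger than 10 KiB
large_files=$(find "$tmpdir" -type f -size +10k)
echo "$large_files"

# -mtime -1 matches files modified less than one day (24 hours) ago
recent_count=$(find "$tmpdir" -type f -mtime -1 | wc -l)
echo "$recent_count"

# Clean up the temporary directory
rm -r "$tmpdir"
```

A + before the number means "more than" and a - means "less than"; the same convention applies to both -size and -mtime.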

The Pipe Command

Now that you have been introduced to the functionality of the 10 helpful tools above, one of the biggest advantages of these commands is the ability to easily string them together using the pipe command: |

The pipe command allows the output from one command to be passed on as the input to the next command. For example, we may want to use the sort command to remove duplicates in a file, and then use the wc command to count the number of unique lines remaining:

sort -u myfile.txt | wc -l

Or we may want to extract particular lines from a file based on a search string with grep, replace a sample name with another ID using sed, extract the first and third column using cut, and then check that this is giving us the required result using head before we decide to save it to a file:

grep "sample1" myfile.txt | sed 's/sample1/ID2098/g' | cut -f 1,3 | head

When piping these simple commands together, you can see just how much can be achieved in a single line of code!
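If you would like to try a pipeline without touching your own data, the sketch below runs a similar chain on a small made-up tab separated file. The file contents are invented for the example, and the result is stored in a variable purely so it can be inspected afterwards.

```shell
# Create a small tab separated example file in a temporary directory (contents are invented)
tmpdir=$(mktemp -d)
printf 'sample1\tliver\t12\nsample2\tkidney\t7\nsample1\tliver\t12\nsample1\theart\t30\n' > "$tmpdir/myfile.txt"

# Keep the sample1 lines, rename the sample, keep columns 1 and 3, then drop duplicate lines
result=$(grep -w "sample1" "$tmpdir/myfile.txt" | sed 's/sample1/ID2098/g' | cut -f 1,3 | sort -u)
echo "$result"

# Count how many unique lines remain
unique_count=$(echo "$result" | wc -l)
echo "$unique_count"

# Clean up the temporary directory
rm -r "$tmpdir"
```

Building a pipeline one command at a time, and checking the output after each step, is usually the easiest way to spot where something goes wrong.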

So, with these 10 simple Linux tools in your belt, you're now ready to tackle any files that come your way!